High Availability on Heroku

Our integration APIs, the APIs our partners consume, are hosted on Heroku. There is only one active instance per API. That sounds like a single point of failure, doesn't it? We planned to increase the number of servers to two to make them highly available.

What is Heroku

Heroku is a Platform as a Service (PaaS). It provides the hardware and the software stack, and you only need to write the code.

Cloud services generally fall into the following categories:

- Infrastructure as a Service (IaaS): AWS, GCP, Azure

- Platform as a Service (PaaS): Heroku, AWS Elastic Beanstalk, Google App Engine

- Software as a Service (SaaS): Salesforce, Dropbox, Google Workspace

What is High Availability

When an application is described as "highly available", it means the application stays available and operational despite errors, disasters, or high traffic.

How do you make an application or a service highly available?

Having redundant components is a common practice to achieve high availability.

In general, high availability models fall into four categories:

- load-balanced: spread the traffic across two or more servers, so that no single server is overwhelmed and there is no single point of failure

- hot standby: keep a running backup node and route traffic to it when the main node fails

- warm standby: the backup node's process is not running, so it needs some time to get into a running state

- cold standby: the backup node is off, so if the primary node fails, the backup needs time to boot and reach a running state

In our case, hot standby fits better than the other models: we just need a backup node that takes over when the primary node fails.

Server availability, or uptime, is measured as a percentage: the share of time the system is fully operational. The table below shows how much downtime each availability level allows.

| Availability (%) | Downtime per day | Downtime per month (30 days) | Downtime per year (365 days) |
|---|---|---|---|
| 99.0 | 14 minutes and 24 seconds | 7 hours and 12 minutes | 3 days, 15 hours, and 36 minutes |
| 99.9 | 1 minute and 26 seconds | 43 minutes and 12 seconds | 8 hours, 45 minutes, and 36 seconds |
| 99.99 (four nines) | 8.6 seconds | 4 minutes and 19 seconds | 52 minutes and 34 seconds |
| 99.999 | 0.86 seconds | 26 seconds | 5 minutes and 15 seconds |
| 99.9999 | 0.086 seconds | 2.6 seconds | 31.5 seconds |
| 99.99999 | 0.0086 seconds | 0.26 seconds | 3.2 seconds |
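The downtime figures follow from simple arithmetic: the allowed downtime is the unavailable fraction, `(100 - availability) / 100`, multiplied by the length of the period. Here is a minimal Python sketch for checking them, assuming a 30-day month and a 365-day year; the `allowed_downtime` helper and the `PERIODS` table are our own illustration, not part of any library.

```python
from datetime import timedelta

# Period lengths assumed in this sketch: a 30-day month and a 365-day year.
PERIODS = {
    "day": timedelta(days=1),
    "month": timedelta(days=30),
    "year": timedelta(days=365),
}


def allowed_downtime(availability_percent: float, period: timedelta) -> timedelta:
    """Return the maximum downtime within `period` for the given availability (%)."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    unavailable_fraction = (100 - availability_percent) / 100
    # timedelta * float is supported and rounds to the nearest microsecond.
    return period * unavailable_fraction


if __name__ == "__main__":
    for availability in (99.0, 99.9, 99.99, 99.999, 99.9999, 99.99999):
        budget = ", ".join(
            f"{name}: {allowed_downtime(availability, period)}"
            for name, period in PERIODS.items()
        )
        print(f"{availability}% -> {budget}")
```

For example, 99.9% over a 30-day month leaves 0.1% of 2,592,000 seconds, which is 2,592 seconds, or 43 minutes and 12 seconds.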

Heroku doesn't offer high availability out of the box, so our option was to deploy the application in two different regions.

Heroku uses a container model to run applications: a dyno is an isolated Linux container. Heroku itself is spread across multiple AWS availability zones, but dynos are locked to a single region.

There are two regions to choose from: US and EU.

Our first solution was DNS failover: if the primary node is unavailable, traffic goes to the backup node.

What is DNS failover?

When you type a URL, your browser asks the DNS server configured on the system for the IP address of the server that hosts the website. That address comes from the domain's A record (IPv4) or AAAA record (IPv6).
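Python's standard library can show this lookup in action. `socket.getaddrinfo` asks the system resolver (which may also consult local files such as /etc/hosts) and returns each address with its family: `AF_INET` for IPv4 addresses, which come from A records, and `AF_INET6` for IPv6 addresses, which come from AAAA records. It does not report record types directly, so the grouping below is inferred from the address family. The `resolve` helper is a hypothetical name used only for this sketch.

```python
import socket


def resolve(hostname: str) -> dict[str, list[str]]:
    """Group the addresses the system resolver returns into A (IPv4) and AAAA (IPv6)."""
    addresses: dict[str, list[str]] = {"A": [], "AAAA": []}
    # Restricting to TCP avoids one duplicate entry per socket type.
    for family, _type, _proto, _canonname, sockaddr in socket.getaddrinfo(
        hostname, None, proto=socket.IPPROTO_TCP
    ):
        if family == socket.AF_INET:
            record = "A"
        elif family == socket.AF_INET6:
            record = "AAAA"
        else:
            continue  # other address families are not A/AAAA answers
        ip = sockaddr[0]
        if ip not in addresses[record]:
            addresses[record].append(ip)
    return addresses


if __name__ == "__main__":
    print(resolve("localhost"))
```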

How can we leverage DNS for high availability? What if the website's nameserver knew which server is healthy and returned that server's IP?

Your domain's DNS provider needs to offer DNS failover. You configure multiple IPs for a domain, and in response to a query the nameserver returns the healthy node's IP first, followed by the rest. We're using Constellix, which offers a few strategies for ordering and returning IPs. The consumer, a browser in our case, uses the first IP to reach the website.
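The ordering rule described above can be modeled in a few lines. This is a hypothetical sketch of what a failover-aware nameserver does, not Constellix's implementation or API; the `Record` type, the `priority` field, and the documentation-range IP addresses are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One A record configured for the failover domain (hypothetical model)."""
    ip: str
    priority: int  # lower value = preferred, e.g. the primary node


def failover_answer(records: list[Record], healthy: set[str]) -> list[str]:
    """Order IPs the way a failover-aware nameserver might answer a query.

    Healthy records come first (by priority) and unhealthy ones after them,
    so a client that uses the first IP lands on a healthy node.
    """
    ranked = sorted(records, key=lambda r: (r.ip not in healthy, r.priority))
    return [r.ip for r in ranked]


records = [
    Record("192.0.2.10", priority=1),     # primary (documentation IP range)
    Record("198.51.100.20", priority=2),  # backup (documentation IP range)
]

if __name__ == "__main__":
    print(failover_answer(records, healthy={"192.0.2.10", "198.51.100.20"}))
    print(failover_answer(records, healthy={"198.51.100.20"}))  # primary is down
```

Real resolvers also cache answers for the record's TTL, so in practice a failover only takes effect once cached answers expire.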

A common recipe is to have a base domain such as integration.x.y.z and configure the redundant servers to accept requests for that base domain. In our case, the redundant servers are integration-us.x.y.z and integration-eu.x.y.z.

The catch is the Host header. If you have ever set up a web server, you have probably configured virtual hosts, a concept that lets one server host multiple websites. How does the web server know which site to serve? It looks at the Host header of the request.
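Here is a minimal sketch of virtual-host dispatch, assuming a hypothetical server that hosts the two regional sites from our example. The `VIRTUAL_HOSTS` table and the `dispatch` function are illustrative names; the point is that the site is chosen purely from the Host header.

```python
from http import HTTPStatus

# Hypothetical virtual-host table: Host header value -> site served for it.
VIRTUAL_HOSTS = {
    "integration-us.x.y.z": "integration API (US)",
    "integration-eu.x.y.z": "integration API (EU)",
}


def dispatch(headers: dict[str, str]) -> tuple[int, str]:
    """Pick the site for a request based only on its Host header."""
    # Header names are case-insensitive.
    host = next((v for k, v in headers.items() if k.lower() == "host"), "")
    # Strip an optional port (IPv6 literals are not handled in this sketch).
    hostname = host.split(":", 1)[0].lower()
    site = VIRTUAL_HOSTS.get(hostname)
    if site is None:
        return HTTPStatus.NOT_FOUND, "no site configured for this host"
    return HTTPStatus.OK, site
```

A request carrying `Host: integration.x.y.z` finds no entry and gets a 404 unless the server is also configured for that name.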

In our case, we would also need to configure the redundant servers to serve integration.x.y.z; however, Heroku doesn't give us that control.

Let's walk through a request cycle for our HA setup. The browser opens integration.x.y.z, and our DNS failover setup returns the IP of the first healthy node, a Heroku instance serving under integration-us.heroku.com.

The request then goes to that IP. Don't forget that the Host header is still set to the initial domain, integration.x.y.z.

The request reaches the Heroku router, which forwards requests to the actual instance. The router sees the Host header set to integration.x.y.z and, guess what, that domain doesn't exist on Heroku!

We know that it is possible to connect a Heroku app to a custom domain. What if we connected both apps to the same one? Unfortunately, that is not feasible: a custom domain must be unique across Heroku because the router uses it to decide which app receives the request.

The conclusion is that DNS failover doesn't fit our setup.

We need a way to change the Host header before the request reaches Heroku. This is where Fastly comes into the picture, as mentioned in the docs.

We use Fastly's load-balancing capability and configure it to route traffic to the primary node while it is healthy; otherwise, traffic flows to the backup node.

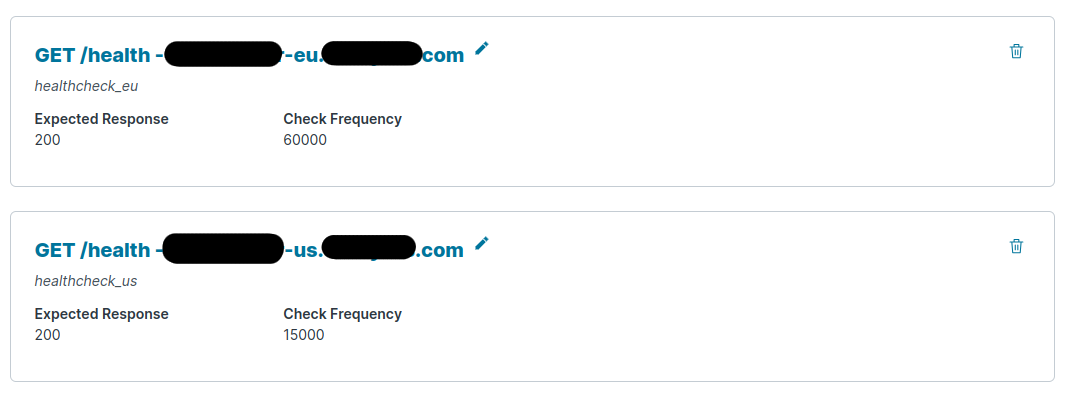

First, add the domain to Fastly. Then, in the Origins section, define two health checks and add the two hosts under Hosts. The important setting is Override host: set it to each app's actual host name, integration-us.heroku.com for the primary and integration-eu.heroku.com for the backup. Otherwise, the initial domain name is sent as the Host header and Heroku cannot route the request.
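To make the role of the Host header in these checks concrete, here is a hypothetical Python sketch of a single health-check probe. It connects to an origin and sends an explicit Host header, which is what Override host controls for the requests Fastly forwards to each origin. The `probe` function, its parameters, and the default `/` path are assumptions for illustration; Fastly's actual health checks are configured in its UI and also apply settings such as check frequency and thresholds.

```python
import http.client


def probe(origin: str, host_header: str, path: str = "/",
          timeout: float = 5.0, use_tls: bool = True) -> bool:
    """Return True if `origin` answers GET `path` with a 2xx status.

    `origin` is "host" or "host:port"; `host_header` is sent as the Host
    header, mimicking an override-host setting (hypothetical helper).
    """
    connection_class = (
        http.client.HTTPSConnection if use_tls else http.client.HTTPConnection
    )
    conn = connection_class(origin, timeout=timeout)
    try:
        # An explicit Host header stops http.client from adding its own.
        conn.request("GET", path, headers={"Host": host_header})
        status = conn.getresponse().status
        return 200 <= status < 300
    except (OSError, http.client.HTTPException):
        # DNS failures, refused connections, timeouts, and TLS or protocol
        # errors all count as unhealthy.
        return False
    finally:
        conn.close()
```

For example, `probe("integration-us.heroku.com", "integration-us.heroku.com")` would check the primary app using its own host name rather than integration.x.y.z.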

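Next, we need a script that routes traffic based on the health of the primary node. Go to Custom VCL and add the following. The script assumes the two hosts are named backend_us and backend_eu, which Fastly exposes in VCL as F_backend_us and F_backend_eu.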

    sub vcl_recv {
      #FASTLY recv

      # The default backend is "us" unless the backend cookie says otherwise.
      set req.backend = F_backend_us;

      # The app relies on session cookies to load the agent information, so every
      # request in a session must be routed to the same backend.

      # Unset the backend cookie on the initial request ("/") so that a new session
      # starts on the "us" backend whenever it is healthy.
      if (req.url.path == "/") {
        unset req.http.Cookie:backend;
      }

      if (!req.http.Cookie:backend) {
        # No sticky cookie: prefer the healthy primary, then the healthy backup.
        if (backend.F_backend_us.healthy) {
          set req.backend = F_backend_us;
        } else if (backend.F_backend_eu.healthy) {
          set req.backend = F_backend_eu;
        }
      } else if (req.http.Cookie:backend == "us") {
        set req.backend = F_backend_us;
      } else if (req.http.Cookie:backend == "eu") {
        set req.backend = F_backend_eu;
      }

      return(pass);
    }

    sub vcl_deliver {
      #FASTLY deliver

      # Pin the client to the backend that served this response.
      if (req.backend == F_backend_us) {
        add resp.http.Set-Cookie = "backend=us; path=/; samesite=none; secure;";
      } else if (req.backend == F_backend_eu) {
        add resp.http.Set-Cookie = "backend=eu; path=/; samesite=none; secure;";
      }
    }

The integration is called with some arguments that are stored in the session cookie, so subsequent requests must be served by the node that handled the initial one. If that node becomes unavailable mid-session, routing the next request to the other node doesn't help, because the other node does not have that session.
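To reason about the edge cases of this routing, the decision made in `vcl_recv` and the cookie set in `vcl_deliver` can be mirrored in a small Python model. This is a hypothetical sketch for checking our assumptions, not code Fastly runs; the `pick_backend` and `sticky_cookie` names, the "us"/"eu" labels, and the `healthy` mapping are illustrative.

```python
from typing import Mapping, Optional


def pick_backend(path: str, backend_cookie: Optional[str],
                 healthy: Mapping[str, bool]) -> str:
    """Mirror the vcl_recv logic: return "us" or "eu" for a request."""
    backend = "us"  # default backend, like `set req.backend = F_backend_us;`

    # The initial request ("/") ignores any sticky cookie, so a new session
    # starts on the primary when it is healthy.
    if path == "/":
        backend_cookie = None

    if not backend_cookie:
        # No sticky cookie: prefer the healthy primary, then the healthy backup.
        if healthy["us"]:
            backend = "us"
        elif healthy["eu"]:
            backend = "eu"
    elif backend_cookie in ("us", "eu"):
        # Sticky session: stay on the pinned backend, even if it is unhealthy.
        backend = backend_cookie
    return backend


def sticky_cookie(backend: str) -> str:
    """Mirror vcl_deliver: the Set-Cookie value that pins the client to `backend`."""
    return f"backend={backend}; path=/; samesite=none; secure;"


if __name__ == "__main__":
    # A session pinned to "us" stays there even while "us" is down.
    print(pick_backend("/orders", "us", {"us": False, "eu": True}))  # us
```

This makes the trade-off explicit: an outage of a node still affects the sessions already pinned to it, while new sessions starting at "/" go to the healthy backup.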

The last step is to connect the domain to Fastly, which is described here.

Let me know in the comments if I need to expand on any part of the process.

The terms node, server, and instance all refer to the same concept in this article.